Run flows in sequence with Orchestration Pipelines in DataStage

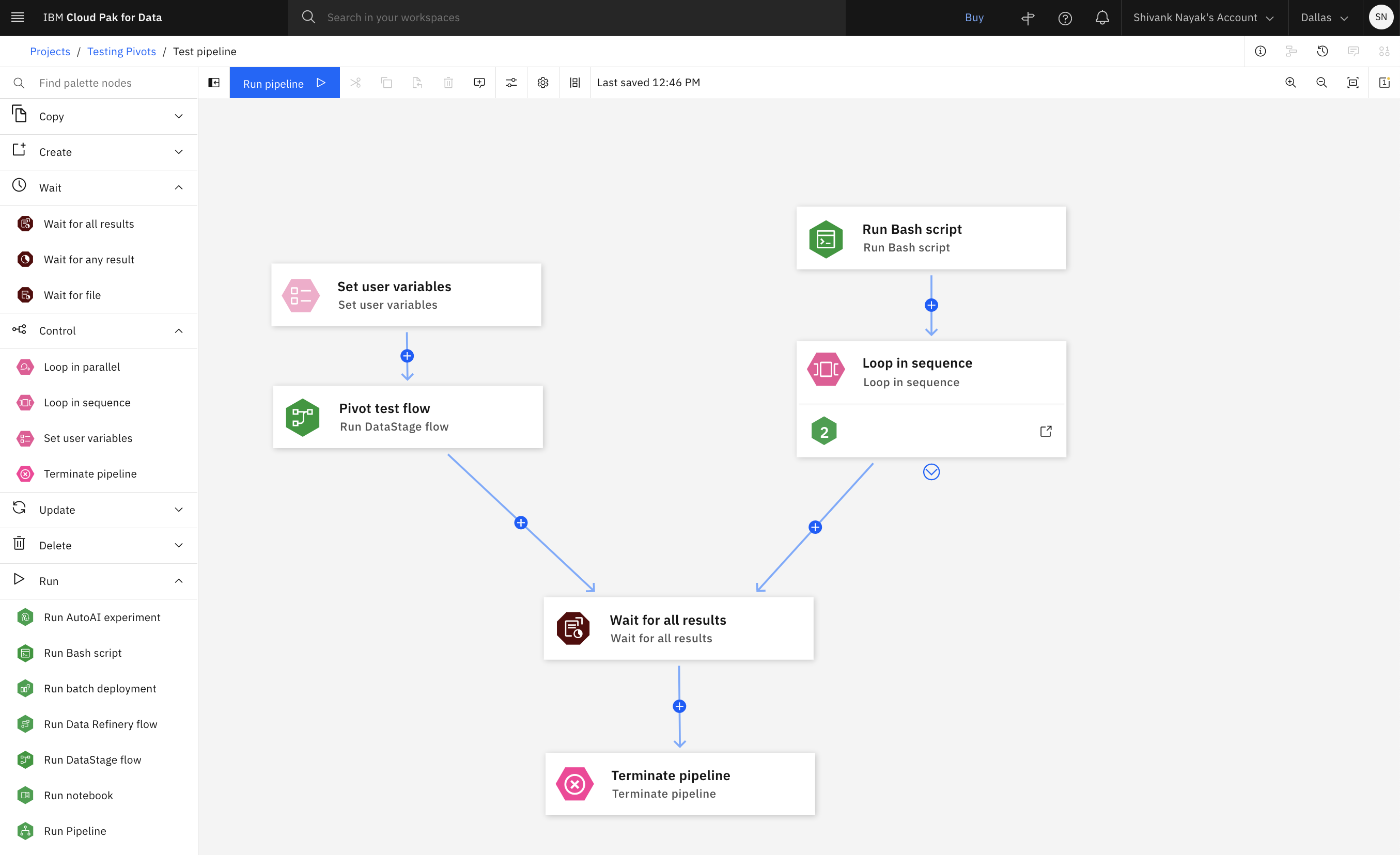

With Orchestration Pipelines, you can create a pipeline that runs DataStage jobs in sequence and according to conditions that you define.

To run DataStage flows in a pipeline, create a new pipeline and add components that run the flows you select. To migrate an existing DataStage sequence job, you can instead import an existing ISX file that contains it.

Components

A pipeline flow is made up of node objects. Use these components to run DataStage jobs in the order of your choice, define actions to take based on whether a job succeeds, run scripts, send notifications, and run sections in a loop. The components and features most relevant to DataStage are listed in Pipeline components for DataStage.

You can specify conditions on connections between pipeline components to determine when a flow will proceed to the next node. For more information, see Adding conditions to a pipeline. To define a complex condition, write an expression in the Expression Builder.
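As a conceptual aid only, the following Python sketch models a flow in which each link carries a condition on the status of the node it leaves, so the next node runs only when that condition holds. It is not the Orchestration Pipelines engine: the `Node`, `Link`, and `run_pipeline` names, the node IDs, and the status strings "Completed" and "Failed" are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Link:
    """A connection to another node, guarded by a condition on the source node's status."""
    target: str
    condition: Callable[[str], bool]


@dataclass
class Node:
    node_id: str
    run: Callable[[], str]  # runs the work and returns a status string
    links: List[Link] = field(default_factory=list)


def run_pipeline(nodes: Dict[str, Node], start: str) -> List[str]:
    """Run nodes breadth-first from `start`, following only links whose condition holds.

    Assumes a tree-shaped flow: there is no cycle detection and no join handling.
    """
    order: List[str] = []
    pending = [start]
    while pending:
        node = nodes[pending.pop(0)]
        status = node.run()
        order.append(f"{node.node_id}:{status}")
        for link in node.links:
            if link.condition(status):
                pending.append(link.target)
    return order


# Hypothetical flow: load only if extract completes; otherwise send a notification.
nodes = {
    "extract": Node("extract", lambda: "Completed", [
        Link("load", lambda status: status == "Completed"),
        Link("notify_failure", lambda status: status == "Failed"),
    ]),
    "load": Node("load", lambda: "Completed"),
    "notify_failure": Node("notify_failure", lambda: "Completed"),
}
print(run_pipeline(nodes, "extract"))  # ['extract:Completed', 'load:Completed']
```

Changing the `extract` node to return "Failed" would route the flow to `notify_failure` instead of `load`, which mirrors defining an action based on job success.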

You can also write expressions to define user variables shared between nodes, or to define an input to a node if the component includes the Enter expression option. The Expression Builder uses CEL syntax and has a number of built-in functions. To reference a pipeline component as a variable within an expression, copy the Node ID from the node's properties, or use `tasks.` to autofill the Node ID of the previous node in the flow.
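Conceptually, an expression is evaluated against a context in which earlier nodes are addressed by their Node ID under `tasks`. The following Python sketch models only that lookup; it is not the Expression Builder or a CEL evaluator. The `vars` key for user variables, the Node ID `run_datastage_job_1`, and the `rows_written` and `min_rows` fields are hypothetical.

```python
from typing import Any, Dict

# Hypothetical context: earlier node outputs under "tasks", keyed by Node ID,
# and user variables under "vars". Real output fields depend on the component.
context: Dict[str, Any] = {
    "tasks": {
        "run_datastage_job_1": {"status": "Completed", "rows_written": 1200},
    },
    "vars": {"min_rows": 1000},
}


def resolve(path: str, ctx: Dict[str, Any]) -> Any:
    """Follow a dotted reference such as 'tasks.<Node ID>.<field>'."""
    value: Any = ctx
    for part in path.split("."):
        if not isinstance(value, dict) or part not in value:
            raise KeyError(f"unknown reference: {path}")
        value = value[part]
    return value


# Python stand-in for a CEL condition such as
#   tasks.run_datastage_job_1.rows_written >= vars.min_rows
ok = resolve("tasks.run_datastage_job_1.rows_written", context) >= resolve("vars.min_rows", context)
print(ok)  # True
```

A reference to a Node ID that does not exist raises an error here, which is why copying the Node ID from the node's properties is safer than typing it by hand.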

Parameter sets

You can add global objects such as parameter sets to a pipeline flow by clicking the Global objects icon. You can also edit the parameter set for a DataStage job in a pipeline.

Click Edit parameter set for this job in the properties panel of a Run DataStage job node to select the value set to use for the job and to edit its values. Click the link icon to unlink a parameter value from the source set and specify a different value. Set a parameter value to Default job configuration to use the default value from the DataStage job. You can also specify parameter values as strings or expressions, select them from another node, or assign them from PROJDEF or the pipeline parameters.

When the source is a parameter set, you can choose a different value set. If the parameter set exists in the pipeline, you can select Value set from pipeline parameters. Sequence jobs from traditional DataStage that use the parameter set value "As-pre-defined" or "Assign pipeline parameter set" are migrated to use Value set from pipeline parameters.
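One way to picture how these choices combine is sketched in Python below, under stated assumptions: a job starts from the values of the selected value set, and individual parameters can then be overridden with an entered value or set to Default job configuration. The parameter set contents, the `effective_parameters` function, and the `DEFAULT_JOB_CONFIGURATION` sentinel are hypothetical illustrations, not product APIs.

```python
from typing import Dict, Mapping

# Hypothetical parameter set with two value sets; names and values are illustrative.
VALUE_SETS: Dict[str, Dict[str, str]] = {
    "dev": {"db_host": "dev-db", "batch_size": "100"},
    "prod": {"db_host": "prod-db", "batch_size": "5000"},
}
# Hypothetical defaults defined in the DataStage job itself.
JOB_DEFAULTS: Dict[str, str] = {"db_host": "localhost", "batch_size": "10"}

# Sentinel standing in for the "Default job configuration" choice.
DEFAULT_JOB_CONFIGURATION = object()


def effective_parameters(
    value_sets: Mapping[str, Mapping[str, str]],
    selected: str,
    job_defaults: Mapping[str, str],
    per_parameter: Mapping[str, object],
) -> Dict[str, str]:
    """Start from the selected value set, then apply per-parameter choices.

    Parameters absent from `per_parameter` stay linked to the value set.
    """
    values = dict(value_sets[selected])  # copy so the value set is not modified
    for name, choice in per_parameter.items():
        if choice is DEFAULT_JOB_CONFIGURATION:
            values[name] = job_defaults[name]
        else:
            values[name] = str(choice)  # unlinked: value entered on the node
    return values


print(effective_parameters(VALUE_SETS, "prod", JOB_DEFAULTS, {"batch_size": "250"}))
# {'db_host': 'prod-db', 'batch_size': '250'}
print(effective_parameters(VALUE_SETS, "dev", JOB_DEFAULTS, {"db_host": DEFAULT_JOB_CONFIGURATION}))
# {'db_host': 'localhost', 'batch_size': '100'}
```

A sentinel object is used instead of a marker string so that a literal value such as "default" can never be mistaken for the Default job configuration choice.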

You can also select Edit local parameters to edit the local parameters for a job. Select Default job configuration to use the job's default values, or select Pipeline parameters to assign values from parameters with the same names that are defined in the pipeline.
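The Pipeline parameters option matches local job parameters to pipeline parameters by name. The following Python sketch illustrates that matching; the function name and sample values are hypothetical, and falling back to the job's default for a local parameter that has no pipeline counterpart is an assumption rather than documented behavior.

```python
from typing import Dict


def local_parameter_values(
    job_defaults: Dict[str, str],
    pipeline_params: Dict[str, str],
    use_pipeline: bool,
) -> Dict[str, str]:
    """Sketch of the two options for a job's local parameters.

    use_pipeline=False: Default job configuration, i.e. the job's own defaults.
    use_pipeline=True: take the pipeline parameter with the same name when one
    exists; falling back to the job default otherwise is an assumption.
    """
    if not use_pipeline:
        return dict(job_defaults)
    return {name: pipeline_params.get(name, default) for name, default in job_defaults.items()}


# Hypothetical values: only run_date is defined in both places.
job = {"run_date": "2024-01-01", "region": "EU"}
pipeline = {"run_date": "2024-06-30", "owner": "ops"}
print(local_parameter_values(job, pipeline, use_pipeline=True))
# {'run_date': '2024-06-30', 'region': 'EU'}
```

Pipeline parameters that the job does not declare, such as `owner` here, are simply ignored.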

Migration

Migration requires DataStage expressions to be translated into CEL expressions. For more information about CEL and the Expression Builder, see CEL expressions and limitations in DataStage. For more information about other migration requirements and limitations, see Migrating and constructing pipeline flows for DataStage.
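Operator syntax is the most mechanical part of that translation. Assuming the sequence-job expressions use DataStage BASIC operators (`=` for equality, `<>` for inequality, `:` for string concatenation, and `AND`, `OR`, `NOT`), a rough Python sketch of a token-level rewrite to CEL's `==`, `!=`, `+`, `&&`, `||`, and `!` might look like the following. It is not the product's migration logic: it leaves function calls, job status constants, and job or activity references untouched, and CEL's `+` only concatenates when both operands are strings, so the result still needs review. The `to_cel` name and the sample identifiers are hypothetical.

```python
import re

# Rough, hypothetical sketch: rewrites a few DataStage BASIC operators to
# their CEL counterparts token by token. It does not handle functions,
# DSJS.* status constants, type coercion, or job/activity references.
_TOKEN = re.compile(r"""
      (?P<string>"[^"]*"|'[^']*')          # quoted literal, copied unchanged
    | (?P<op><>|<=|>=|=|<|>|:)             # comparison and concatenation operators
    | (?P<number>\d+(?:\.\d+)?)            # numeric literal
    | (?P<word>[A-Za-z_$][A-Za-z0-9_.$]*)  # identifier or keyword
    | (?P<other>\S)                        # parentheses and any other character
""", re.VERBOSE)

_OPS = {"=": "==", "<>": "!=", ":": "+"}
_KEYWORDS = {"AND": "&&", "OR": "||", "NOT": "!"}


def to_cel(expr: str) -> str:
    """Translate operators and logical keywords; whitespace is preserved."""
    def replace(match: re.Match) -> str:
        text = match.group(0)
        if match.lastgroup == "op":
            return _OPS.get(text, text)
        if match.lastgroup == "word":
            return _KEYWORDS.get(text.upper(), text)
        return text
    return _TOKEN.sub(replace, expr)


print(to_cel('status = "OK" AND count <> 0'))  # status == "OK" && count != 0
print(to_cel('first : " " : last'))            # first + " " + last
```

Tokenizing first, rather than running plain string replacements, keeps quoted literals such as `"a = b"` intact and prevents `=` inside `<=` or `>=` from being rewritten.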