Adding generative chat function to your applications with the chat API

Use the watsonx.ai chat API to build conversational workflows that use foundation models to generate answers.

Ways to develop

You can build chat workflows by using these programming methods:

- REST API

- Python

Alternatively, you can use graphical tools from the watsonx.ai UI to build chat workflows. See Chatting with documents and media files.

Overview

The watsonx.ai chat API implements methods for interacting with foundation models in a conversational way. You can identify different message types, such as a system prompt, user inputs, and foundation model outputs, including user-specific follow-up questions and answers. Use the chat API to mimic the workflow that you get when you interact with a foundation model from the Prompt Lab in chat mode.

Supported foundation models

To programmatically get a list of foundation models that support the chat API, specify the filters=function_text_chat parameter when you submit a List the available foundation models method request.

For API method details, see the watsonx.ai API reference documentation.

For example:

curl -X GET \
  'https://{region}.ml.cloud.ibm.com/ml/v1/foundation_model_specs?version=2024-10-10&filters=function_text_chat'
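The same lookup can be sketched in Python with only the standard library. The helper names and the default region are illustrative, and the sketch assumes that the response lists models in a `resources` array whose entries carry a `model_id` field; confirm the response shape in the API reference before you rely on it.

```python
import json
import urllib.parse
import urllib.request


def build_specs_url(region="us-south", version="2024-10-10"):
    """Build the URL that lists foundation models that support the chat API."""
    query = urllib.parse.urlencode(
        {"version": version, "filters": "function_text_chat"}
    )
    return f"https://{region}.ml.cloud.ibm.com/ml/v1/foundation_model_specs?{query}"


def extract_model_ids(payload):
    """Return model IDs from a parsed response (assumes a `resources` list)."""
    return [
        item["model_id"]
        for item in payload.get("resources", [])
        if "model_id" in item
    ]


def list_chat_models(region="us-south"):
    """Send the GET request and return the model IDs (needs network access)."""
    with urllib.request.urlopen(build_specs_url(region)) as response:
        return extract_model_ids(json.load(response))
```

Keeping the URL builder and the response parser separate from the network call makes each piece easy to check without contacting the service.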

For more information about foundation models that support tool-calling, see Building agent-driven workflows with the chat API.

REST API

You can use the chat API for tasks such as multiple-turn conversations and chatting about images, as the following examples show.

For API method details, see the watsonx.ai API reference documentation.

Example of a multiple-turn chat

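The following command submits a chat request that includes the earlier turns of the conversation in the messages array, so that the foundation model can interpret the follow-up question in context.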

In the following example, replace the {url} variable with the correct value for your instance, such as us-south.ml.cloud.ibm.com. Add your own bearer token and project ID.

curl --request POST 'https://{url}/ml/v1/text/chat?version=2024-10-08' \
  -H 'Authorization: Bearer eyJhbGciOiJSUzUxM...' \
  -H 'Content-Type: application/json' \
  -H 'Accept: application/json' \
  -d '{
    "model_id": "meta-llama/llama-3-8b-instruct",
    "project_id": "4947c695-a374-428c-acca-332c1a1dc9e9",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant that avoids causing harm. When you do not know the answer to a question, you say \"I do not know\"."
      },
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "I have a question about Earth. How many moons does the Earth have?"
          }
        ]
      },
      {
        "role": "assistant",
        "content": "The Earth has one natural satellite, which is simply called the Moon."
      },
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "What about Saturn?"
          }
        ]
      }
    ],
    "max_tokens": 300,
    "time_limit": 1000
  }'
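As a rough Python counterpart to the curl command, the following sketch assembles the same request with the standard library. The helper names `text_message` and `build_chat_request` are hypothetical, the token and project ID are placeholders, the system prompt is shortened, and the sketch assumes that `url` is a host name without a scheme, such as us-south.ml.cloud.ibm.com. The request is only built here, not sent.

```python
import json
import urllib.request


def text_message(role, text):
    """Build one chat message; user text goes in a list of typed content parts."""
    if role == "user":
        return {"role": role, "content": [{"type": "text", "text": text}]}
    return {"role": role, "content": text}


def build_chat_request(url, token, project_id, model_id, messages,
                       max_tokens=300, time_limit=1000, version="2024-10-08"):
    """Return a urllib Request that mirrors the curl command (nothing is sent)."""
    body = {
        "model_id": model_id,
        "project_id": project_id,
        "messages": messages,
        "max_tokens": max_tokens,
        "time_limit": time_limit,
    }
    return urllib.request.Request(
        f"https://{url}/ml/v1/text/chat?version={version}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


messages = [
    text_message("system", "You are a helpful assistant that avoids causing harm."),
    text_message("user", "I have a question about Earth. How many moons does the Earth have?"),
    text_message("assistant", "The Earth has one natural satellite, which is simply called the Moon."),
    text_message("user", "What about Saturn?"),
]
request = build_chat_request(
    "us-south.ml.cloud.ibm.com", "YOUR_TOKEN", "YOUR_PROJECT_ID",
    "meta-llama/llama-3-8b-instruct", messages,
)
# To send the request, pass it to urllib.request.urlopen() and parse the JSON body.
```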

Sample response:

{
  "id": "chat-45932923166b4607bde75207a0a9f5d4",
  "model_id": "meta-llama/llama-3-8b-instruct",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Saturn has a total of 82 confirmed moons!"
      },
      "finish_reason": "stop"
    }
  ],
  "created": 1728404199,
  "created_at": "2024-10-08T16:16:40.102Z",
  "usage": {
    "completion_tokens": 12,
    "prompt_tokens": 87,
    "total_tokens": 99
  },
  "system": {
    "warnings": [
      {
        "message": "This model is a Non-IBM Product governed by a third-party license that may impose use restrictions and other obligations. By using this model you agree to its terms as identified in the following URL.",
        "id": "disclaimer_warning",
        "more_info": "https://dataplatform.cloud.ibm.com/docs/content/wsj/analyze-data/fm-models.html?context=wx"
      }
    ]
  }
}
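To continue the conversation, a client typically reads the assistant message from the first choice and appends it to the message history before it adds the next user question. The following sketch shows one way to do that against a trimmed copy of the sample response. The helper names and the follow-up question are hypothetical.

```python
def assistant_reply(response):
    """Return the text of the first choice in a parsed chat response."""
    return response["choices"][0]["message"]["content"]


def append_turn(messages, response, next_user_text):
    """Return a new history with the assistant reply and the next user question."""
    return messages + [
        {"role": "assistant", "content": assistant_reply(response)},
        {"role": "user", "content": [{"type": "text", "text": next_user_text}]},
    ]


# Trimmed copy of the sample response.
sample = {
    "choices": [{
        "index": 0,
        "message": {
            "role": "assistant",
            "content": "Saturn has a total of 82 confirmed moons!",
        },
        "finish_reason": "stop",
    }],
    "usage": {"completion_tokens": 12, "prompt_tokens": 87, "total_tokens": 99},
}

# "And Jupiter?" is an invented follow-up question for illustration.
history = append_turn([], sample, "And Jupiter?")
print(assistant_reply(sample))
print(sample["usage"]["total_tokens"])
```

Returning a new list instead of mutating the existing history keeps earlier turns intact if a request has to be retried.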

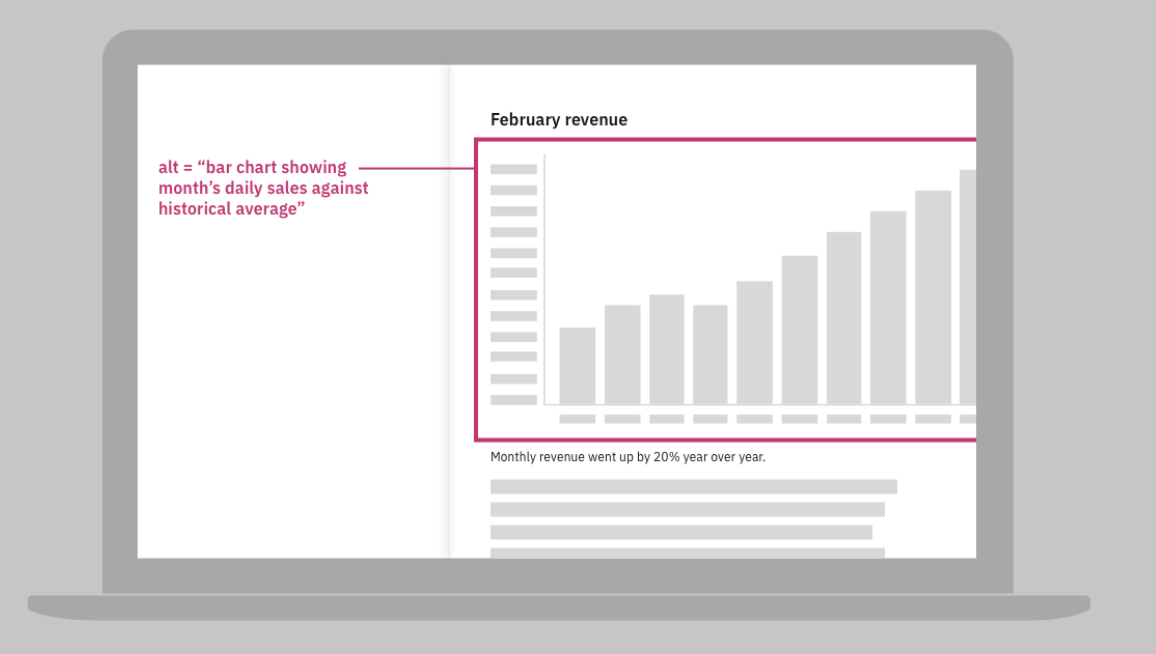

Example of chatting about an image

This example asks the model to explain what the following image illustrates.

The sample code is equivalent to chatting with an image from the Prompt Lab. For details about the alternative method that uses the UI, see Chatting with uploaded images.

Images that you reference from the chat API must meet the following requirements:

- Add one image per chat.

- Supported file types are PNG and JPEG.

- One image counts as approximately 1,200–3,000 tokens, depending on the image size.

For the image to be processed, you must encode the image as Base64, which converts the binary data for the image into a string of characters. You can use an online tool to convert the image or use code.

The following sample Python code encodes a hosted image. If you call the REST API from a Python notebook, you can use this code to encode the image. Then, when you define the POST request, you can specify the image_b64_encoded_string variable as the url value.

import base64
import os
import wget  # third-party package (pip install wget)

filename = 'downloaded-image.png'
url = 'https://www.ibm.com/able/static/my-input-image.png'

if not os.path.isfile(filename):  # download only if no local copy exists
    wget.download(url, out=filename)

with open(filename, 'rb') as image_file:  # encode the raw bytes as a Base64 string
    image_b64_encoded_string = base64.b64encode(image_file.read()).decode('utf-8')
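If the image is already on disk, a standard-library variant can check the file type before encoding. This is a sketch under assumptions: the helper names are hypothetical, the type check looks only at the leading signature bytes of the file, and the demonstration writes a tiny stand-in file instead of a real image.

```python
import base64
import os
import tempfile

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"


def detect_image_type(data):
    """Return 'png' or 'jpeg' based on the leading bytes, else None."""
    if data.startswith(PNG_SIGNATURE):
        return "png"
    if data.startswith(JPEG_SIGNATURE):
        return "jpeg"
    return None


def encode_image_file(path):
    """Read a local PNG or JPEG file and return it as a Base64 string."""
    with open(path, "rb") as image_file:
        data = image_file.read()
    if detect_image_type(data) is None:
        raise ValueError(f"{path} is not a PNG or JPEG file")
    return base64.b64encode(data).decode("utf-8")


# Demonstration with a stand-in file (signature bytes only, not a real image).
with tempfile.NamedTemporaryFile(suffix=".png", delete=False) as tmp:
    tmp.write(PNG_SIGNATURE + b"demo")
demo_string = encode_image_file(tmp.name)
os.remove(tmp.name)
```

Rejecting unsupported files locally avoids spending a request on an image that the API cannot process.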

The following REST API request uses the chat API to chat about an image that is specified with a Base64-encoded string.

In the following example, replace the {url} variable with the correct value for your instance, such as us-south.ml.cloud.ibm.com. Add your own bearer token and project ID.

curl --request POST 'https://{url}/ml/v1/text/chat?version=2024-10-09' \
  -H 'Authorization: Bearer eyJhbGciOiJSUzUxM...' \
  -H 'Content-Type: application/json' \
  -H 'Accept: application/json' \
  -d '{
    "model_id": "meta-llama/llama-3-2-11b-vision-instruct",
    "project_id": "4947c695-a374-428c-acca-332c1a1dc9e9",
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "type": "image_url",
            "image_url": {
              "url": "<encoded_string>"
            }
          },
          {
            "type": "text",
            "text": "What does the image convey about alternative image text?"
          }
        ]
      }
    ],
    "max_tokens": 300,
    "time_limit": 10000
  }'
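When you call the REST API from Python, the request body can be assembled from the encoded string instead of being pasted into a shell command. The following sketch uses a hypothetical helper named `image_chat_body`, and the short Base64 value is a placeholder, not a real image. It passes the encoded string as the url value, as the request above does; whether your deployment expects an extra prefix on that value is something to confirm in the API reference.

```python
import json


def image_chat_body(encoded_string, question, project_id,
                    model_id="meta-llama/llama-3-2-11b-vision-instruct",
                    max_tokens=300, time_limit=10000):
    """Build the JSON body for a chat request with one image and one question."""
    return {
        "model_id": model_id,
        "project_id": project_id,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": encoded_string}},
                {"type": "text", "text": question},
            ],
        }],
        "max_tokens": max_tokens,
        "time_limit": time_limit,
    }


body = image_chat_body(
    "aGVsbG8=",  # placeholder Base64 text, not a real image
    "What does the image convey about alternative image text?",
    "YOUR_PROJECT_ID",
)
payload = json.dumps(body)  # string to send as the POST request body
```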

Sample response:

{
  "id": "chat-f5f3ab2b8d7f4657b72a4f868e24f3fd",
  "model_id": "meta-llama/llama-3-2-90b-vision-instruct",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "The image shows a bar chart on a laptop screen with alternative image text that describes the content of the image. The alternative image text is \"bar chart showing month's daily sales against historical average.\" This text provides a clear and concise description of the image, allowing users who cannot see the image to understand its content.\n\n**Key Points:**\n\n* The image is a bar chart on a laptop screen.\n* The alternative image text describes the content of the image.\n* The alternative image text is \"bar chart showing month's daily sales against historical average.\"\n* The text provides a clear and concise description of the image.\n\n**Conclusion:**\n\nThe image conveys that alternative image text should be used to provide a clear and concise description of an image, even if the image cannot be seen. This is important for accessibility reasons, as it allows users who cannot see the image to still understand its content."
      },
      "finish_reason": "stop"
    }
  ],
  "created": 1728564568,
  "model_version": "3.2.0",
  "created_at": "2024-10-10T12:49:35.286Z",
  "usage": {
    "completion_tokens": 184,
    "prompt_tokens": 21,
    "total_tokens": 205
  },
  "system": {
    "warnings": [
      {
        "message": "This model is a Non-IBM Product governed by a third-party license that may impose use restrictions and other obligations. By using this model you agree to its terms as identified in the following URL.",
        "id": "disclaimer_warning",
        "more_info": "https://dataplatform.cloud.ibm.com/docs/content/wsj/analyze-data/fm-models.html?context=wx"
      }
    ]
  }
}

Troubleshooting the chat API

If the response is in HTML format and mentions Gateway time-out, the request probably took too long and expired. Increase the value of the time_limit field that you specify in the request.
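One way to automate this advice is to detect the HTML time-out page and resend the request with a larger time_limit. The following sketch is illustrative only: `send` is a hypothetical callable that posts the body and returns the raw response text, the detection is a simple text heuristic, and doubling the limit on each attempt is an arbitrary choice.

```python
def looks_like_gateway_timeout(raw_body):
    """Heuristic: an HTML body that mentions a gateway time-out."""
    text = raw_body.lower()
    return "<html" in text and "gateway time" in text


def chat_with_longer_limit(send, body, factor=2, max_attempts=3):
    """Call send(body); on a gateway time-out, retry with a larger time_limit."""
    attempt_body = dict(body)  # copy so the caller's body is not modified
    for _ in range(max_attempts):
        raw = send(attempt_body)
        if not looks_like_gateway_timeout(raw):
            return raw, attempt_body["time_limit"]
        attempt_body["time_limit"] *= factor
    raise TimeoutError("request still timed out after raising time_limit")


def fake_send(body):
    """Stand-in for a real HTTP call: succeeds only with a large enough limit."""
    if body["time_limit"] < 4000:
        return "<html><body><h1>504 Gateway Time-out</h1></body></html>"
    return '{"choices": []}'


raw, used_limit = chat_with_longer_limit(fake_send, {"time_limit": 1000})
```

With the stand-in sender, the first two attempts time out and the third succeeds after the limit grows from 1000 to 4000.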

Python

See the ModelInference class of the watsonx.ai Python library.
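A minimal sketch of a chat call with the library might look like the following. It assumes that the ibm-watsonx-ai package is installed, that you authenticate with an API key, and that the returned dictionary has the same shape as the REST sample responses; the function name `chat_with_sdk` and the placeholder values are hypothetical. Check the library documentation for the current signatures.

```python
def chat_with_sdk(api_key, url, project_id, messages,
                  model_id="meta-llama/llama-3-8b-instruct"):
    """Send chat messages through the Python library and return the reply text.

    Requires the ibm-watsonx-ai package and valid credentials.
    """
    # Imported here so that the module loads even without the package installed.
    from ibm_watsonx_ai import Credentials
    from ibm_watsonx_ai.foundation_models import ModelInference

    model = ModelInference(
        model_id=model_id,
        credentials=Credentials(url=url, api_key=api_key),
        project_id=project_id,
    )
    response = model.chat(messages=messages)
    # Assumes the same response shape as the REST API.
    return response["choices"][0]["message"]["content"]


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": [
        {"type": "text", "text": "How many moons does the Earth have?"},
    ]},
]
# Example call (needs real credentials):
# reply = chat_with_sdk("YOUR_API_KEY", "https://us-south.ml.cloud.ibm.com",
#                       "YOUR_PROJECT_ID", messages)
```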

To get started, see the following sample notebooks:

- Use the REST API to complete conversational tasks: Use watsonx, and mistralai/mistral-large to make simple chat conversation and tool calls

- Use the Python SDK to chat about an image: Use watsonx, and meta-llama/llama-3-2-11b-vision-instruct model for image processing to generate a description of the IBM logo

- Use the Python SDK to generate responses in JSON format while chatting with the granite-3-3-8b-instruct model: Use watsonx, and ibm/granite-3-3-8b-instruct to perform chat conversation with JSON response format

- Use the Python SDK to chat with the granite-3-3-8b-instruct model and control the model's response in the following ways:

  - The model provides details about the reasoning used to generate a response.

  - The model summarizes a topic by using content directly from the topic or paraphrasing the content in the model's own words.

  For details, see Use watsonx, and ibm/granite-3-3-8b-instruct to perform chat conversation with control roles.

Learn more

- Credentials for programmatic access

- Prompt Lab

- Chatting with documents and media files

- Building agent-driven workflows with the chat API

- Supported foundation models
